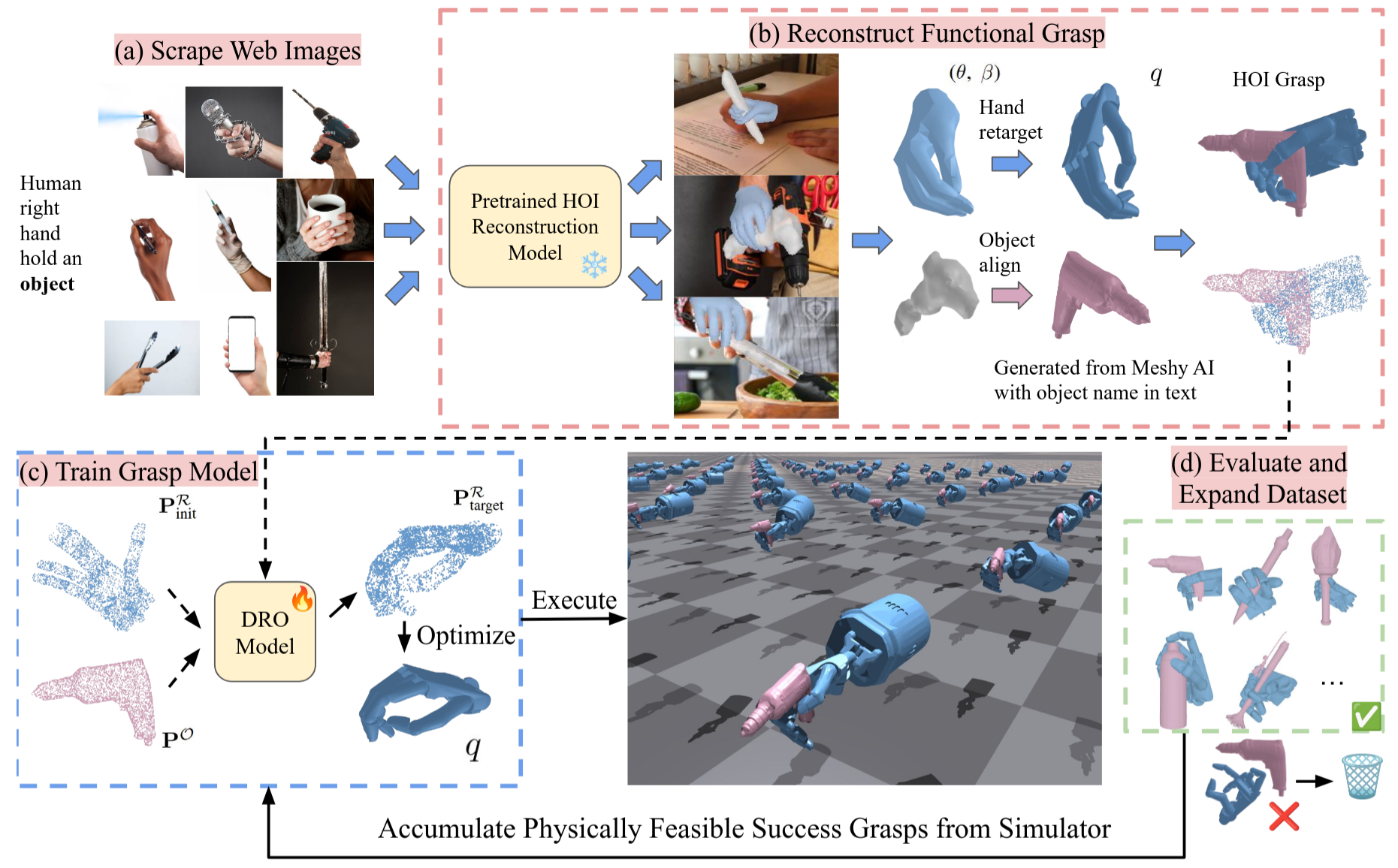

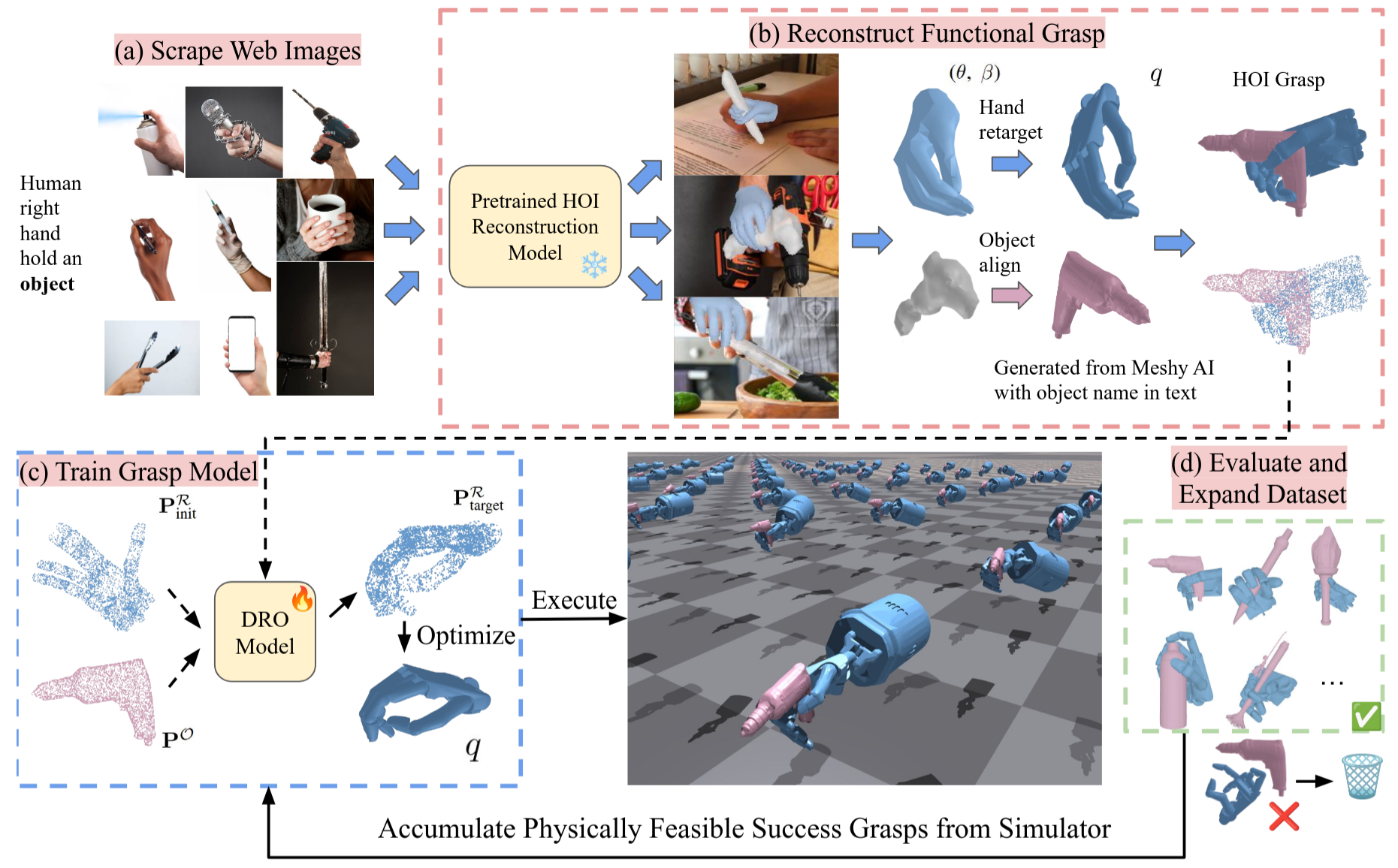

Pipeline Overview

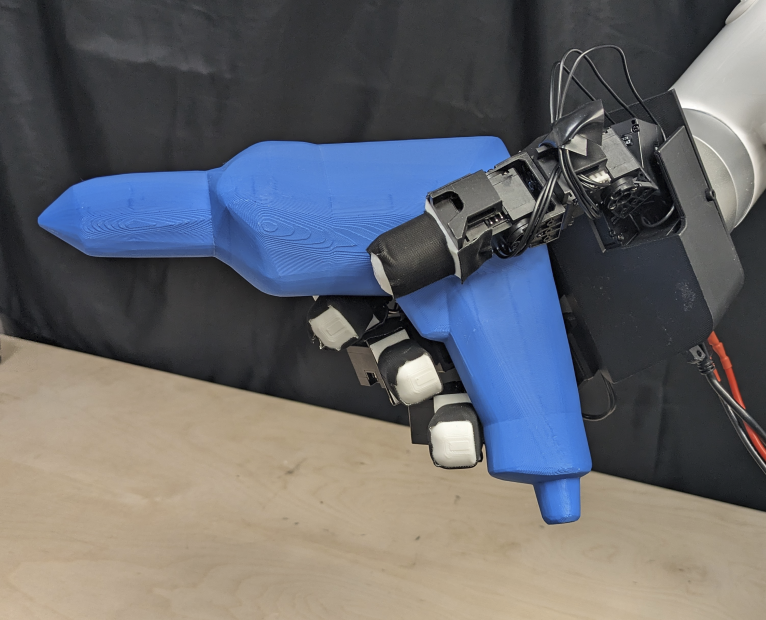

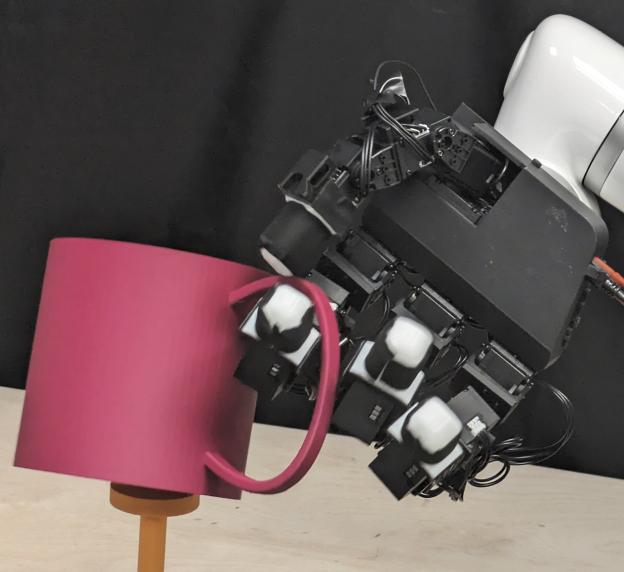

Functional grasping is essential for enabling dexterous multi-finger robot hands to manipulate objects effectively. Prior work largely focuses on power grasps, which only involve holding an object, or relies on in-domain demonstrations for specific objects. We propose leveraging human grasp information extracted from web images, which capture natural and functional hand–object interactions (HOI). Using a pretrained 3D reconstruction model, we recover 3D human HOI meshes from RGB images. To train on these noisy HOI data, we use: 1) an interaction-centric model to learn the functional interaction pattern between hand and object, and 2) geometry-based filtering to remove infeasible grasps, followed by physical simulation to retain grasps that can resist disturbances. In Isaac Gym simulation, our model trained on reconstructed HOI grasps achieves a 75.8% success rate on objects from the web dataset and generalizes to unseen objects, yielding a 6.7% improvement in success rate and a 1.8$\times$ increase in functionality ratings compared to baselines. In real-world experiments with the LEAP hand and Inspire hand, it attains a 77.5% success rate across 12 objects, including challenging ones such as a syringe, spray bottle, knife, and tongs.
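As an illustration of what a geometry-based filter could look like, the following is a minimal sketch and not the authors' implementation. It assumes a grasp is infeasible when the hand penetrates the object too deeply or never comes close to its surface. The function names, the point-cloud representation with outward normals, and both thresholds are hypothetical choices made for this example.

```python
import numpy as np


def signed_distance_to_surface(points, surface_pts, surface_normals):
    """Approximate signed distance from each point to a sampled surface.

    The sign comes from the outward normal of the nearest surface sample:
    positive outside the object, negative inside. This is only an
    approximation and assumes a reasonably dense surface sampling.
    """
    diff = points[:, None, :] - surface_pts[None, :, :]   # (N, M, 3)
    dist = np.linalg.norm(diff, axis=-1)                   # (N, M)
    nearest = dist.argmin(axis=1)                          # (N,)
    rows = np.arange(len(points))
    offset = diff[rows, nearest]                           # (N, 3)
    side = np.einsum("ij,ij->i", offset, surface_normals[nearest])
    sign = np.where(side < 0.0, -1.0, 1.0)                 # zero counts as outside
    return sign * dist[rows, nearest]


def is_geometrically_feasible(hand_pts, surface_pts, surface_normals,
                              max_penetration=0.005, max_contact_gap=0.01):
    """Hypothetical feasibility test for one reconstructed grasp.

    Thresholds are in the same length unit as the point clouds and are
    illustrative values, not taken from the source.
    """
    sd = signed_distance_to_surface(hand_pts, surface_pts, surface_normals)
    too_deep = sd.min() < -max_penetration          # hand sinks into the object
    has_contact = np.abs(sd).min() <= max_contact_gap  # some hand point is near the surface
    return bool(has_contact and not too_deep)
```

Grasps that pass such a check would then go on to the physical-simulation stage, which tests whether they resist disturbances; that stage is not sketched here.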

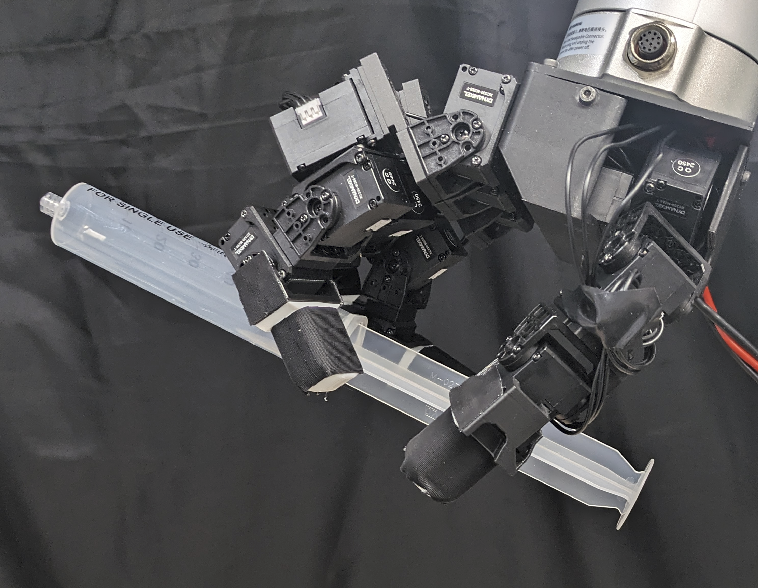

We reconstruct human HOI from web images of humans holding objects, retarget the human hand mesh to the multi-fingered robot hand, and align the noisy object meshes with 3D shapes generated by the text-to-3D tool Meshy AI.

Grasp 0

Grasp 1

Grasp 2

Using these reconstructed HOI as training data, we train the interaction-centric grasping model DRO. The results show that a model trained on HOI reconstructed from web images predicts functional grasps and generalizes to held-out objects.

All videos are played at 2.25× speed.

Wine Glass

Tongs

Phone

Power Drill

Mug

Microphone

Spray Bottle

Syringe

Bowl

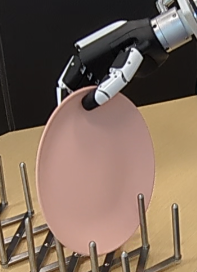

Plate

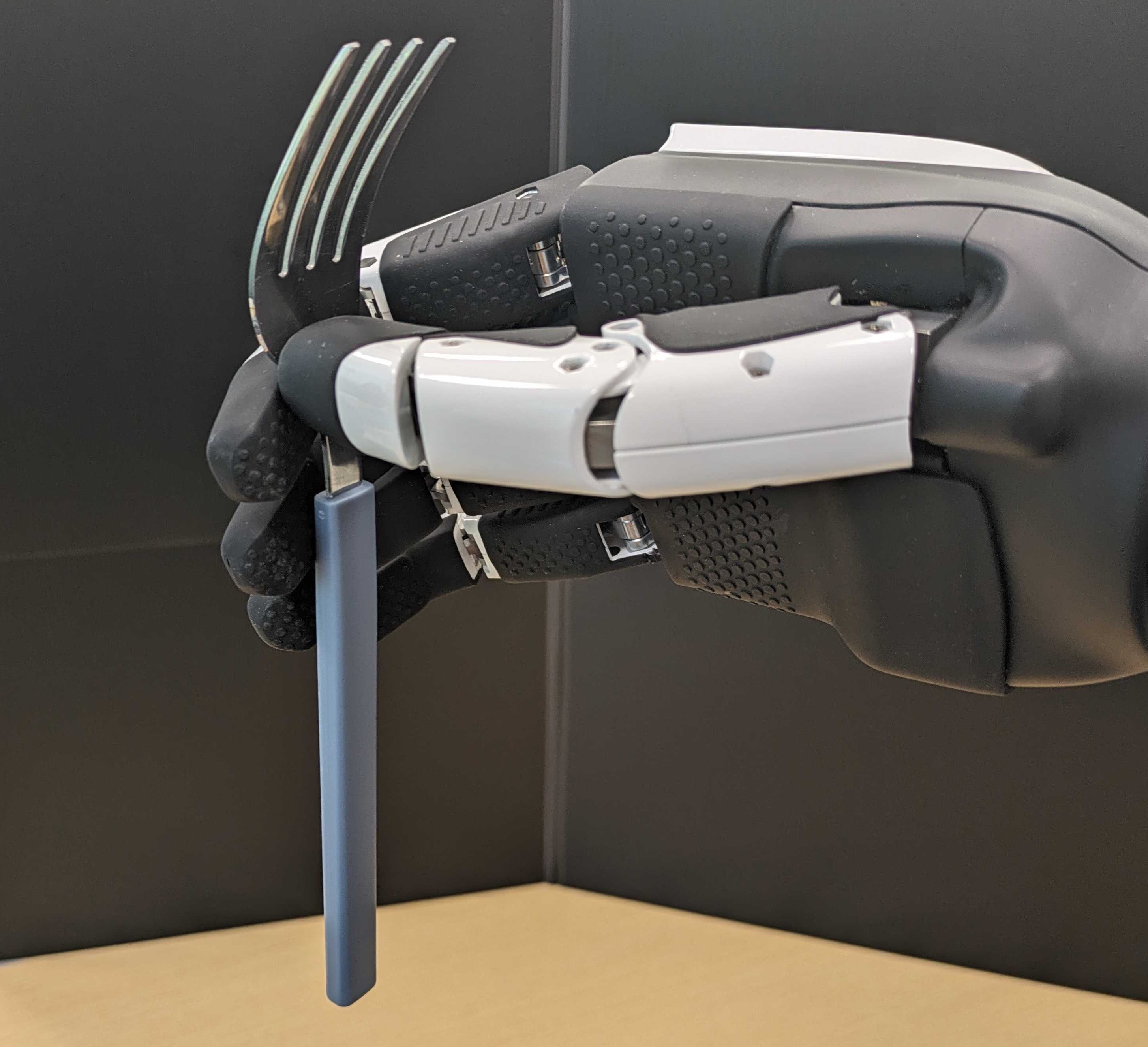

Fork

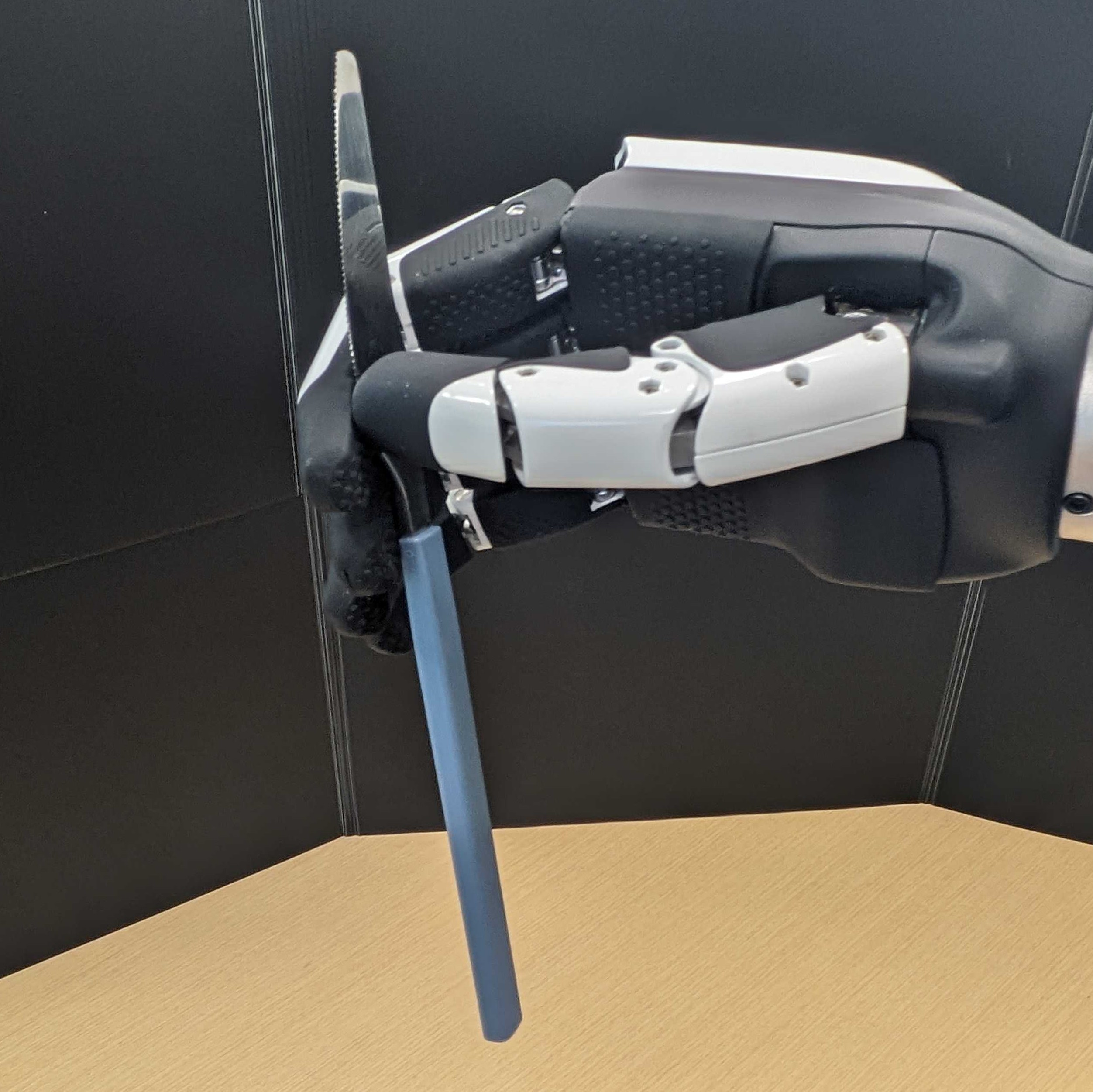

Knife